1 | Foreword

At x-ion we firmly believe in Bluestore as the next big step for our Ceph-based object storage products. Past difficulties in running our clusters were often caused by problems with the Filestore technology and especially its underlying filesystem. Filestore put a lot of strain on our hardware, which often resulted in a corrupted filesystem that either could not be recovered at all or took a long time to recover.

Bluestore offers an alternative because it was written specifically with Ceph's setup and needs in mind. It does not use a journaling filesystem. Instead, it writes directly to the disk, which reduces latency and complexity by removing an entire layer: the filesystem itself.

Due to customer demand for more resilience and data safety, we started a project to expand our Ceph cluster to a second site. During this project, we decided to set up the second site with Ceph Bluestore instead of Filestore.

This gave us the unique opportunity to compare Ceph Filestore and Bluestore directly, in the same setup, under the same load and with the same user interaction.

Beforehand, x-ion had done extensive research and testing to determine the ideal software settings as well as the best-performing hardware configuration for our customers’ use cases.

The results of this comparison are presented in section 2 for the migration period from single-site to dual-site operation, and in section 3 for normal operation.

In addition, we recorded our observations comparing Bluestore and Filestore while dealing with and correcting errors and failures. An example of such a failure scenario is described in section 4.

2 | System performance under high stress during balancing

2.1 Description of system under high stress

When we enabled the second site, two thirds of the cluster data had to be rewritten, and one additional copy of each object had to be written as well. In total, this meant that the entire used capacity of the cluster, at that time 1293 TiB, had to be written to disk. This put a lot of strain on the existing Filestore OSDs (Object Storage Daemons) and nodes, since data not only had to be read from them but also written to them: only one of the three replicas (431 TiB) would stay in place.
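The volumes above are consistent with each other, as a quick back-of-the-envelope calculation shows. This is a minimal sketch assuming that the 1293 TiB was raw used capacity spread evenly over three replicas, that two of the three existing copies were moved and that one new copy was added.

```python
# Back-of-the-envelope check of the migration volume.
# Assumption: 1293 TiB is raw used capacity, evenly spread over 3 replicas.
USED_TIB = 1293
OLD_COPIES = 3   # one site, three copies
NEW_COPIES = 4   # two sites, four copies

one_replica = USED_TIB / OLD_COPIES                # logical data held by one copy
moved = (OLD_COPIES - 1) * one_replica             # 2 of 3 copies get rewritten
added = (NEW_COPIES - OLD_COPIES) * one_replica    # one brand-new copy
total_written = moved + added

print(f"one replica:   {one_replica:.0f} TiB")
print(f"rewritten:     {moved:.0f} TiB")
print(f"new copy:      {added:.0f} TiB")
print(f"total written: {total_written:.0f} TiB")
```

The total written equals the used capacity before the migration, which is why the whole cluster effectively had to be rewritten once.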

2.2 Phase One – switch from one site with three copies to two sites with four copies

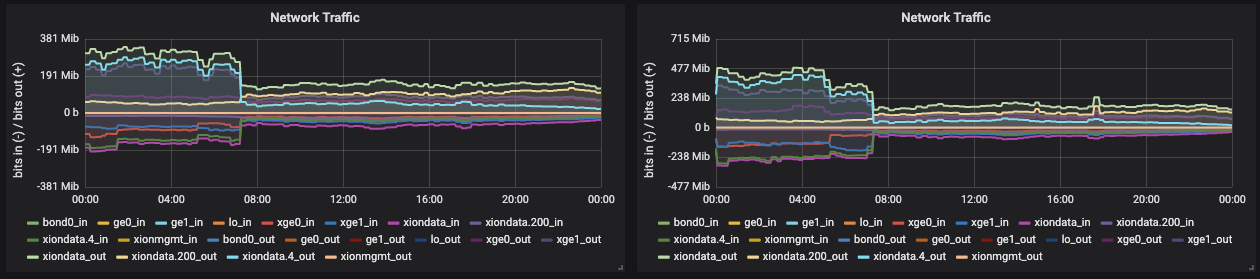

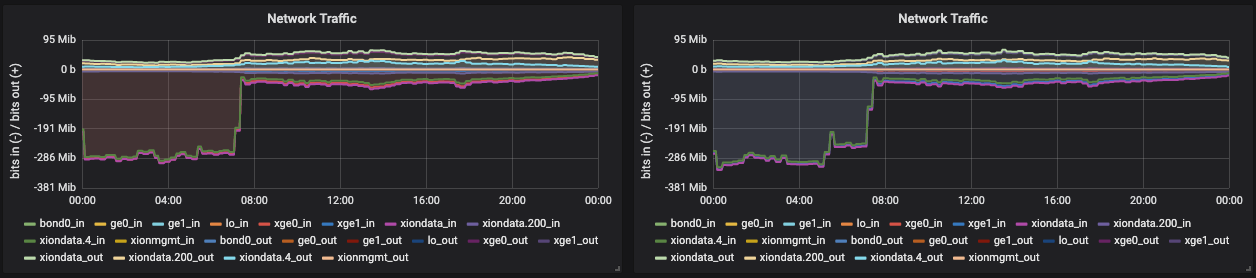

In the days after the switch, the cluster was constantly backfilling one PG (placement group) per OSD at a time. Because the Bluestore OSDs did not yet hold any completely replicated PGs, they only performed writes. This was clearly noticeable in the network traffic: the Bluestore nodes had no read traffic.

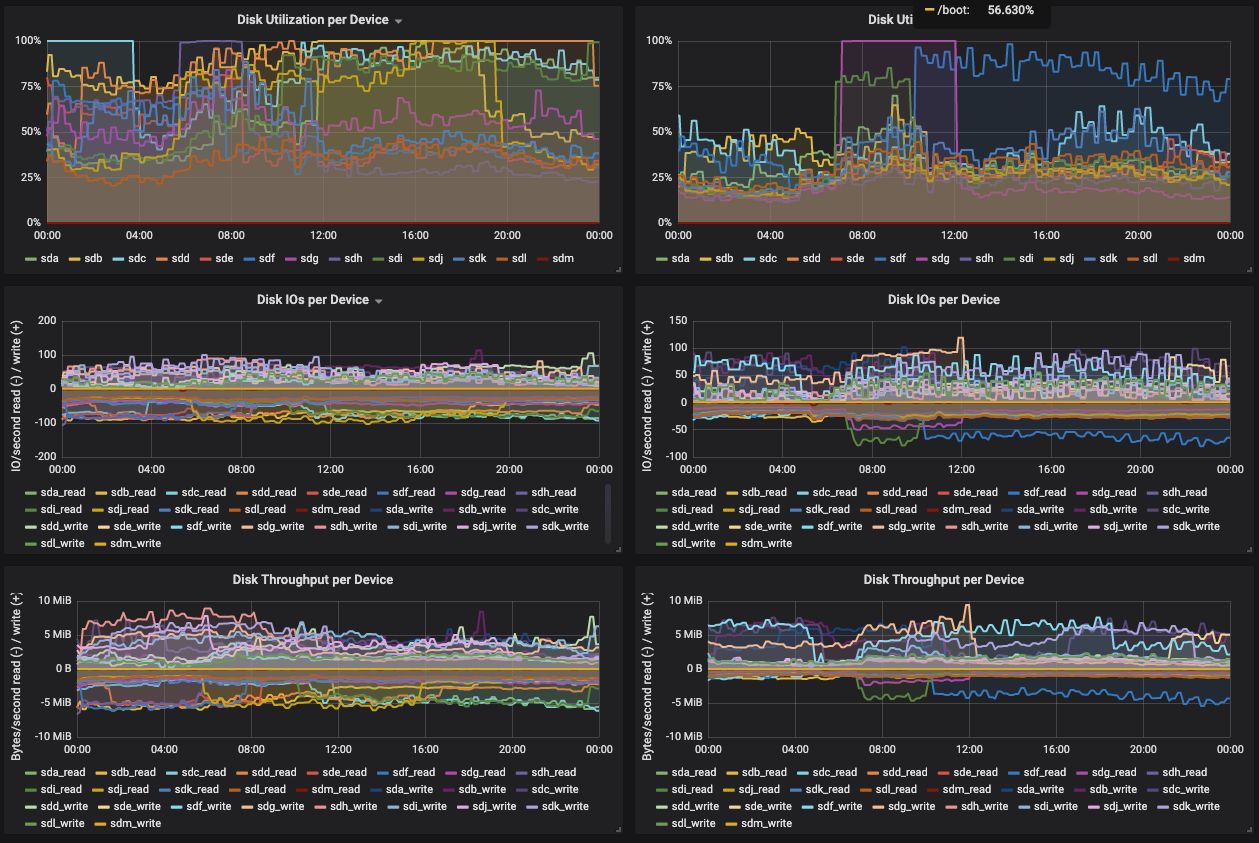

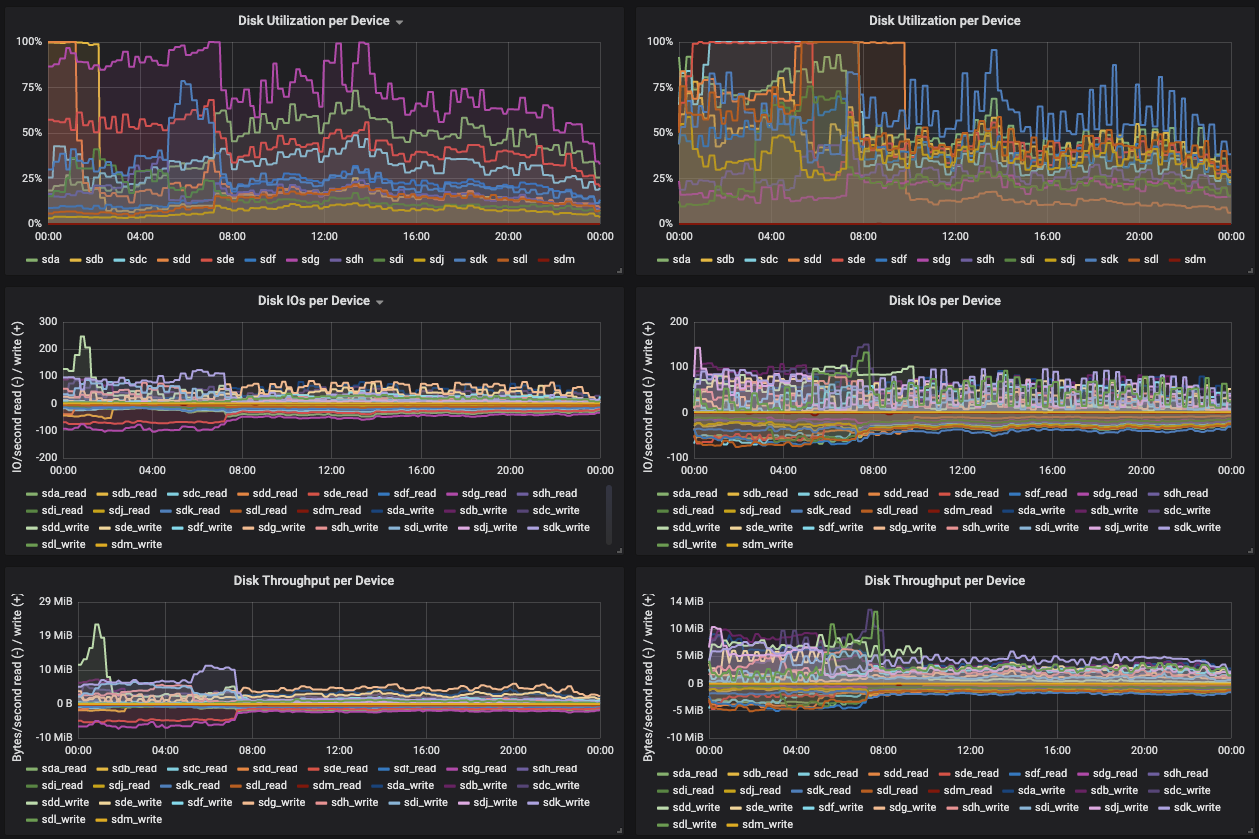

The disk statistics showed very high disk utilization on the Filestore nodes, whereas the Bluestore nodes showed low disk utilization despite their high write load.

Despite high write rates to one Bluestore node, its load average stayed below 5, while the Filestore nodes constantly showed a load above 5, at times even reaching 50.

2.3 Phase Two – balancing at night

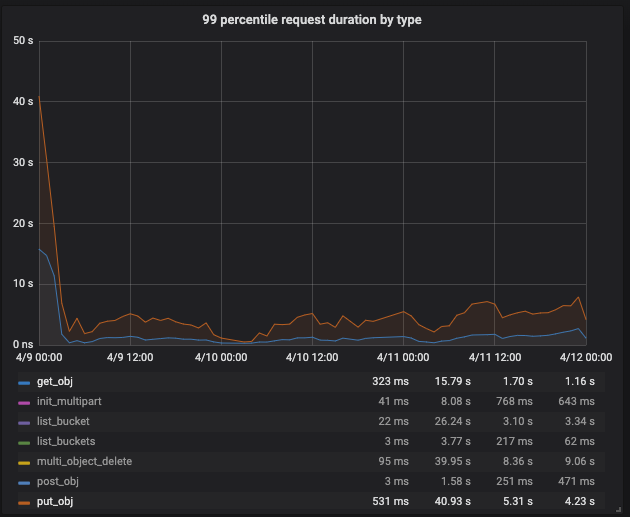

As we continued the balancing, we encountered a problem. Since PGs also had to be rewritten on the Filestore nodes, the Filestore directory hierarchy grew, and, to maintain performance, Filestore by default splits a directory into 16 smaller directories once it reaches a certain threshold. Each such split caused the PG in question to hang for approximately 20 to 40 seconds. We observed this problem especially in the 99th-percentile request latencies, but it also affected the mean request latencies.
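Filestore stores objects as files in a per-PG directory tree and splits a leaf directory into 16 subdirectories when it holds too many files. The sketch below computes that threshold, assuming the formula given for the `filestore_split_multiple` option in the Ceph Filestore configuration reference. The default values of `filestore_merge_threshold` and `filestore_split_rand_factor` have changed between Ceph releases, so the defaults used here are assumptions rather than the settings of our cluster.

```python
import random
from typing import Optional


def split_threshold(split_multiple: int = 2,
                    merge_threshold: int = -10,
                    split_rand_factor: int = 20,
                    rng: Optional[random.Random] = None) -> int:
    """Files a Filestore subdirectory may hold before it is split into 16.

    Mirrors the formula documented for `filestore_split_multiple`:
    (split_multiple * abs(merge_threshold) + rand() % split_rand_factor) * 16
    """
    jitter = 0
    if split_rand_factor > 0:
        rng = rng or random.Random()
        # randrange(n) yields 0..n-1, the same range as rand() % n
        jitter = rng.randrange(split_rand_factor)
    return (split_multiple * abs(merge_threshold) + jitter) * 16


# Without the random term, the defaults give 2 * 10 * 16 = 320 files.
print(split_threshold(split_rand_factor=0))  # 320
```

Because every PG that receives data through backfill grows at a similar rate, many directories can cross this threshold at around the same time; the random term is meant to spread such splits out.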

Because this had a negative impact on our customers, we decided to backfill only at night. After a few experiments, we settled on backfilling Filestore OSDs slowly and Bluestore OSDs at a significantly higher rate.
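A night-only schedule with different rates per backend could look roughly like the following sketch. It only builds `ceph` command strings instead of running them. The per-backend limits and the night window are hypothetical values, since the text only says "slowly" and "at a significantly higher rate"; the input is assumed to be the JSON list printed by `ceph osd metadata`, which contains an `osd_objectstore` field per OSD. `ceph config set` requires Mimic or later (older releases would use `ceph tell osd.N injectargs`), and newer releases using the mClock scheduler may not honor `osd_max_backfills` without further settings.

```python
import json
from typing import List

# Hypothetical limits: concurrent backfills per OSD, by object store type.
MAX_BACKFILLS = {"filestore": 1, "bluestore": 4}
NIGHT_START, NIGHT_END = 22, 6  # hypothetical window, local hours


def is_night(hour: int) -> bool:
    # The window wraps around midnight: 22:00 to 05:59.
    return hour >= NIGHT_START or hour < NIGHT_END


def backfill_commands(osd_metadata_json: str, hour: int) -> List[str]:
    """Build (but do not run) ceph CLI commands for the given hour."""
    if not is_night(hour):
        # Pause backfilling cluster-wide during business hours.
        return ["ceph osd set nobackfill"]
    cmds = ["ceph osd unset nobackfill"]
    for osd in json.loads(osd_metadata_json):
        # Unknown backends fall back to the conservative limit of 1.
        limit = MAX_BACKFILLS.get(osd.get("osd_objectstore"), 1)
        cmds.append(f"ceph config set osd.{osd['id']} osd_max_backfills {limit}")
    return cmds


sample = json.dumps([
    {"id": 0, "osd_objectstore": "filestore"},
    {"id": 1, "osd_objectstore": "bluestore"},
])
for cmd in backfill_commands(sample, hour=23):
    print(cmd)
```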

Once the Bluestore nodes had completed backfilling PGs, they also started to serve reads.

Otherwise, the observations remained the same; only the load on the Filestore nodes was lower than before, though still much higher than on the Bluestore nodes.

2.4 Phase Three – increased backfilling during the day

We had decided to backfill only at night to minimize the impact of the migration on our customers and to keep daytime operation stable and smooth. During this period, however, data redundancy degraded because Filestore OSDs and nodes kept failing. Since backfilling was stopped completely during the main working hours, recovery was paused as well. Because Ceph did not automatically prioritize recovery, we manually enforced recovery over backfilling. Some PGs had been degraded for so long that they could no longer be recovered and had to be backfilled as well. This led to a slow loss of redundancy, which by itself was no reason for concern. However, the failure of a Filestore node combined with a wrong decision by the administrator on duty led to a situation in which Ceph had only one copy of the data left. While this kept the cluster writable, after some time it became impossible to recover the PGs without setting the cluster to read-only. To recover from this, we performed automated maintenance in agreed time slots each night.
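The text does not say which mechanism we used to prioritize recovery over backfilling. One option on Luminous and later is `ceph pg force-recovery`, which accepts one or more PG IDs. The sketch below picks the PGs whose state includes `recovery_wait` and builds a single such command. It takes plain `(pgid, state)` pairs as input because the format of PG dumps differs between releases; parsing a real dump is left out, and the sample PG IDs are made up.

```python
from typing import Iterable, List, Tuple


def force_recovery_commands(pgs: Iterable[Tuple[str, str]]) -> List[str]:
    """Build a `ceph pg force-recovery` command for PGs waiting on recovery.

    `pgs` holds (pgid, state) pairs, where state is Ceph's '+'-joined
    PG state string, e.g. "active+recovery_wait+degraded".
    """
    waiting = sorted(pgid for pgid, state in pgs
                     if "recovery_wait" in state.split("+"))
    if not waiting:
        return []
    return ["ceph pg force-recovery " + " ".join(waiting)]


pgs = [
    ("1.a", "active+recovery_wait+degraded"),
    ("1.b", "active+backfill_wait"),
    ("1.1f", "active+recovery_wait+undersized+degraded"),
]
print(force_recovery_commands(pgs))
```

PGs that only wait for backfill (like `1.b` above) are left alone, so recovery of degraded data is not queued behind them.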

The Filestore and Bluestore metrics did not change significantly during this phase and were essentially the same as before, so we do not discuss them further. Instead, we compared the reliability of the two.

2.5 Reliability of Filestore and Bluestore

The reliability of Filestore nodes had been an issue for us for quite some time. Especially under load, Filestore OSDs tend to “flap”: the OSD process becomes unresponsive for some time, most likely because it is waiting on the underlying disk, and is temporarily marked down.

If multiple OSD processes hang, the whole node can become unstable, as has happened several times. If the node does not become responsive again and has to be restarted, the chance of filesystem corruption is greatly increased. An XFS repair takes roughly 12 hours and does not always succeed.

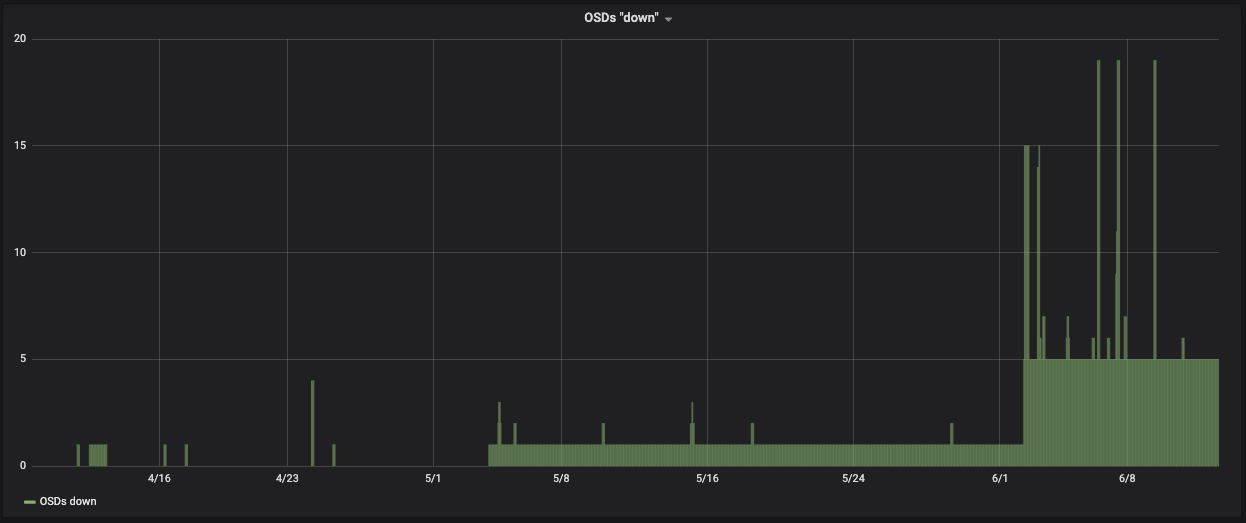

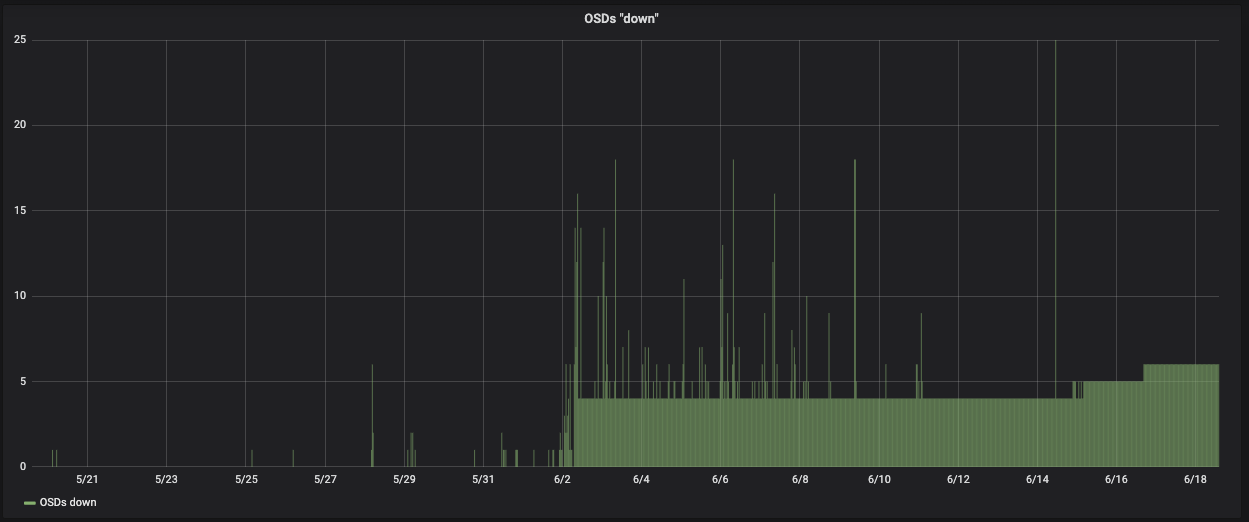

Unfortunately, our long-term monitoring data was sampled at too low a rate to reliably capture OSDs going down, so it shows only some of the flapping OSDs and nodes during the backfilling process.

A closer look at our short-term monitoring better visualizes the problem of highly utilized Filestore OSDs and nodes.
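Why coarse sampling misses flaps: a point-in-time sample only sees an OSD as down if it happens to be taken during the outage. This minimal sketch uses a single 40-second outage and two hypothetical sampling intervals, 5 minutes and 10 seconds; the actual intervals of our long-term and short-term monitoring are assumptions here.

```python
from typing import List, Tuple


def sampled_down(outages: List[Tuple[int, int]], interval: int,
                 duration: int) -> List[int]:
    """Return the sample times (in seconds) at which the OSD is seen as down.

    `outages` are half-open [start, end) intervals in which the OSD was down;
    samples are taken at 0, interval, 2 * interval, ... below `duration`.
    """
    return [t for t in range(0, duration, interval)
            if any(start <= t < end for start, end in outages)]


# One 40-second flap starting 100 s into a 15-minute window.
flaps = [(100, 140)]
print(sampled_down(flaps, interval=300, duration=900))  # 5-minute samples
print(sampled_down(flaps, interval=10, duration=900))   # 10-second samples
```

With 5-minute samples the flap is invisible, while 10-second samples catch it four times.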

During the backfilling process, we had flapping OSDs almost daily, and several times we even observed a whole node becoming unresponsive. Most of these OSD and node outages were caused by software. With Bluestore OSDs, we did not experience a single software-related failure.

2.6 Assessment

Under high stress, Bluestore nodes tend to run more smoothly than Filestore nodes, with less strain on memory, CPU and disk. This is especially noticeable in “soft” failures such as unresponsive processes. During the entire two-month backfilling process, we did not have any difficulties operating the Bluestore nodes, despite putting them under higher load with higher backfilling rates than the Filestore nodes.

3 | Normal operation

3.1 Description of normal operations

During normal operation, we compared the node metrics of Filestore and Bluestore nodes.

3.2 Observations for normal operation

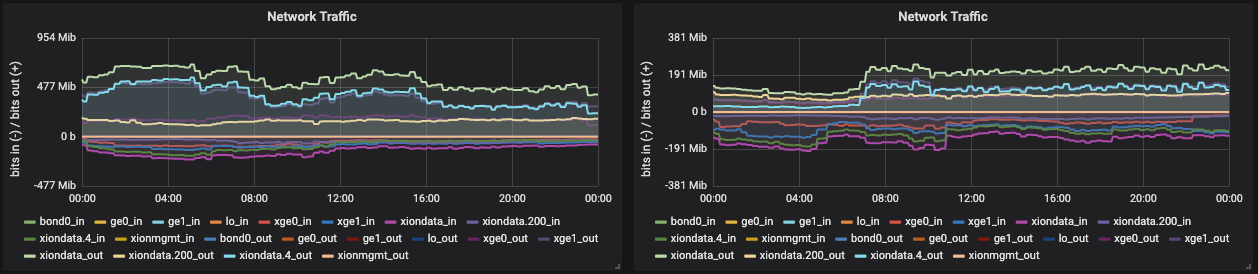

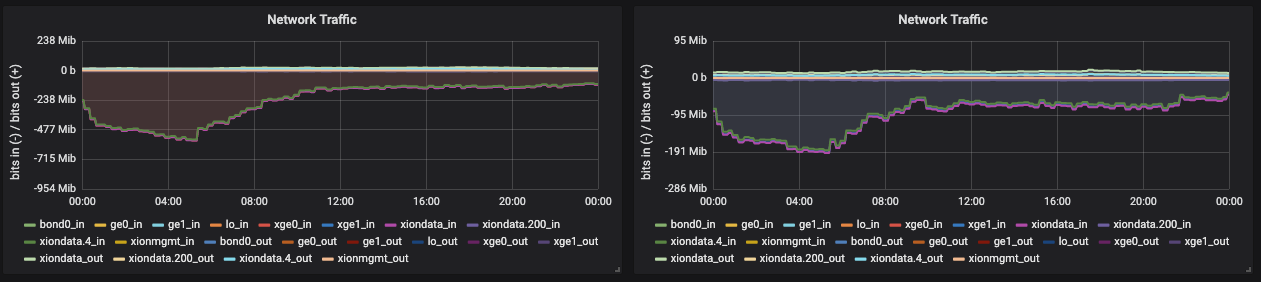

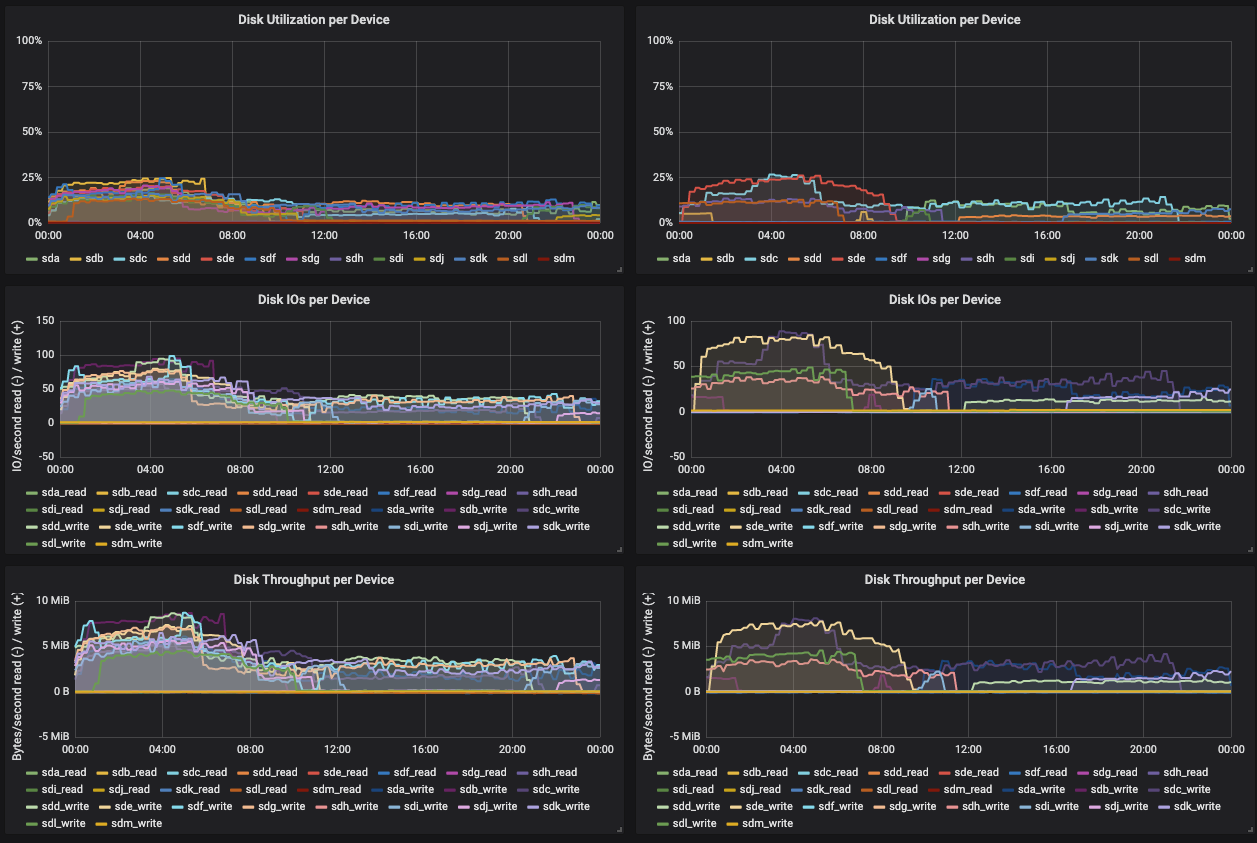

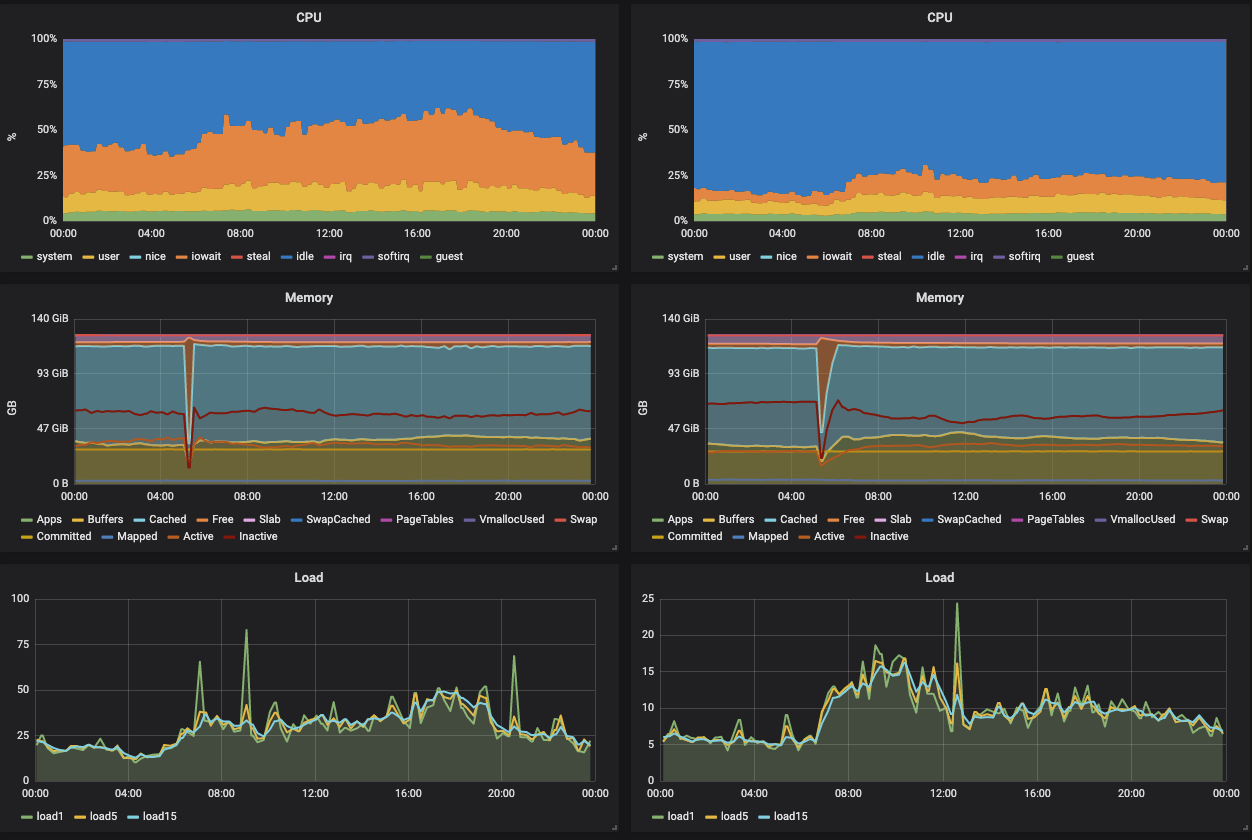

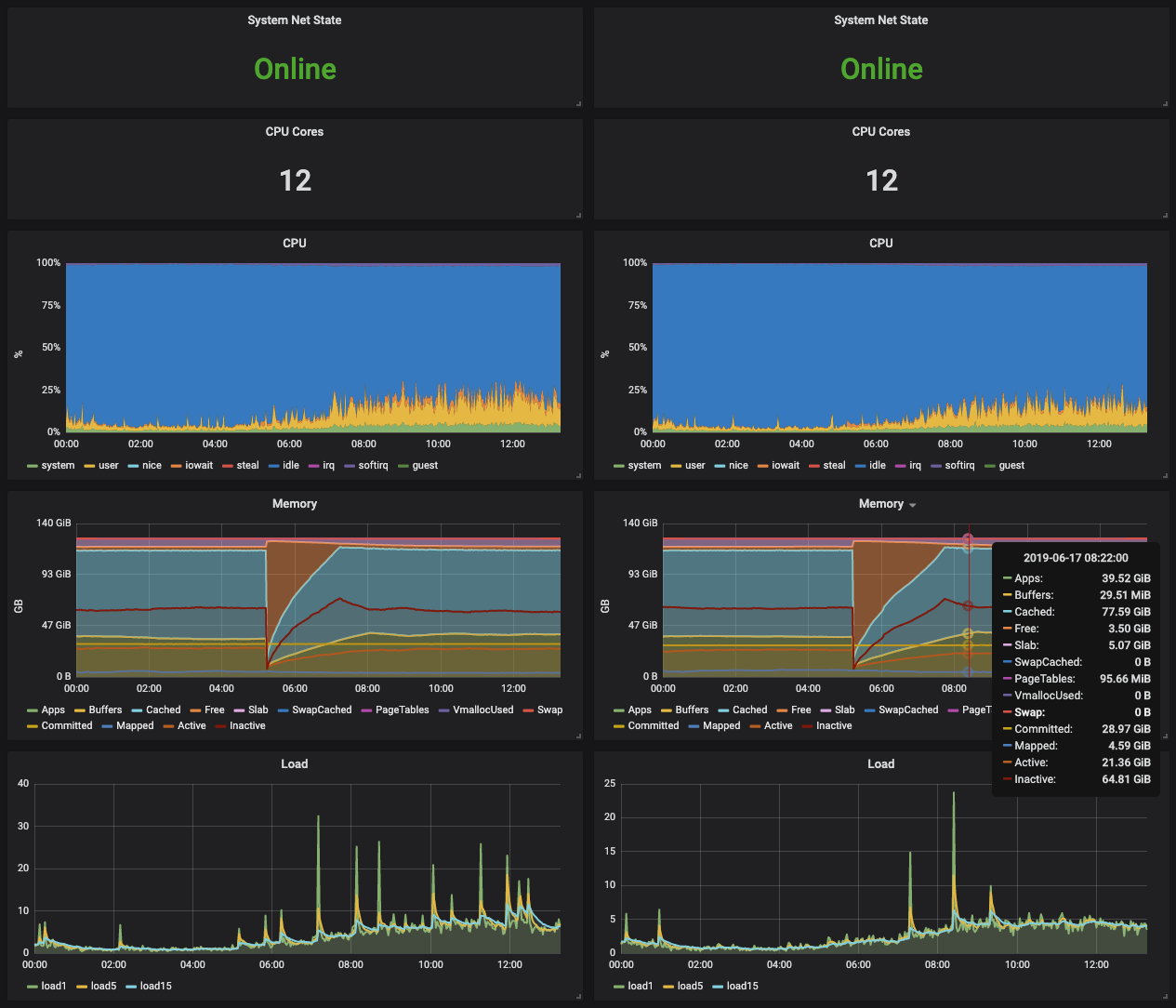

To compare Filestore with Bluestore, two nodes were chosen at random from each site. The results are shown in the graphs.

As a first observation, Bluestore nodes consumed far less memory (about 3 GiB free on Filestore nodes versus about 56 GiB free on Bluestore nodes) and had less load (Filestore nodes had a load above 4 with spikes up to 40, while Bluestore nodes stayed below 4). The composition of the load also differed: Filestore nodes tended to show higher user load, whereas Bluestore nodes tended to show higher iowait. This was most likely caused by how the NVMe drives were used: for caching with Filestore, and for direct writes with Bluestore.

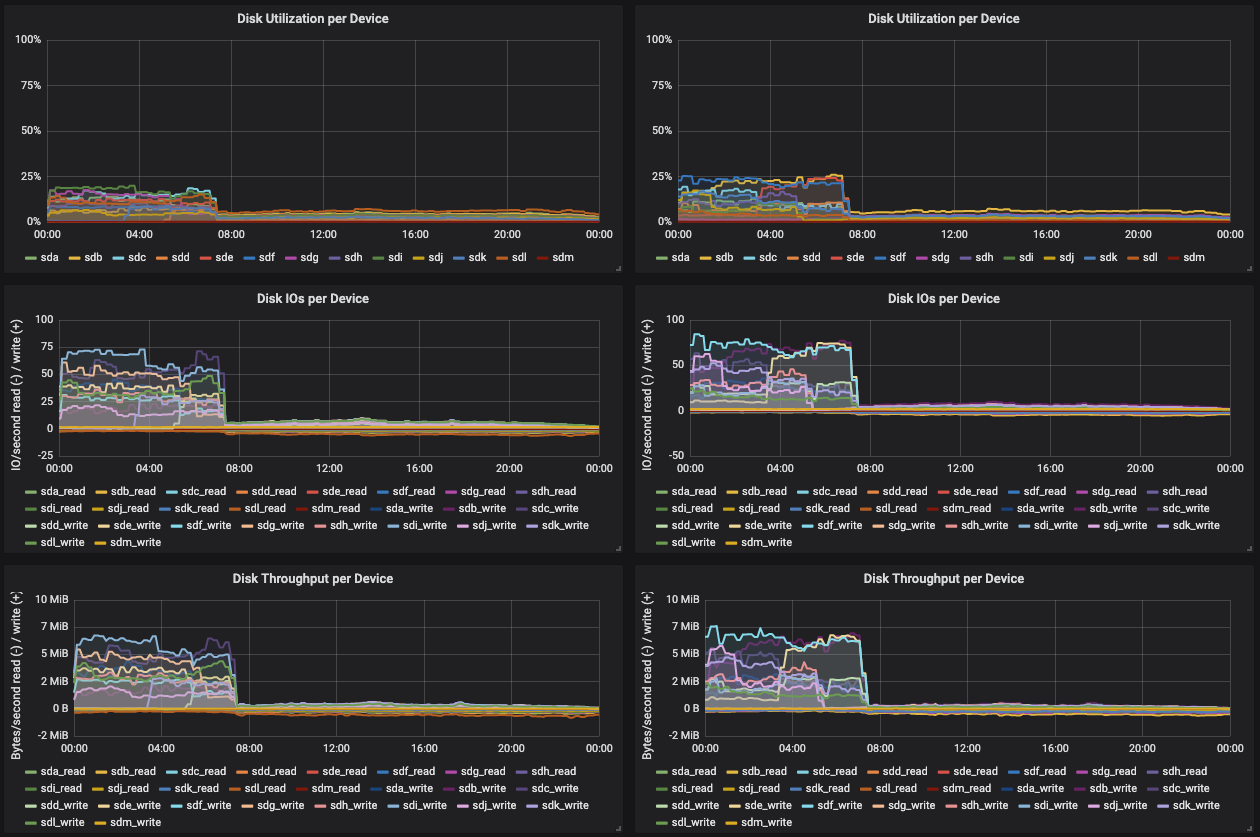

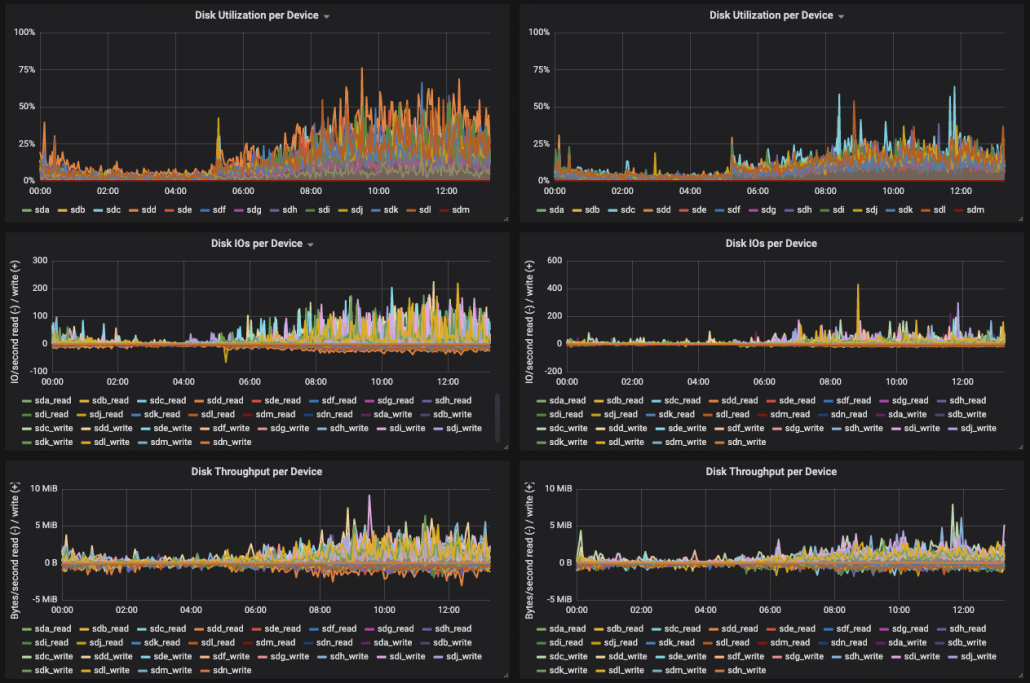

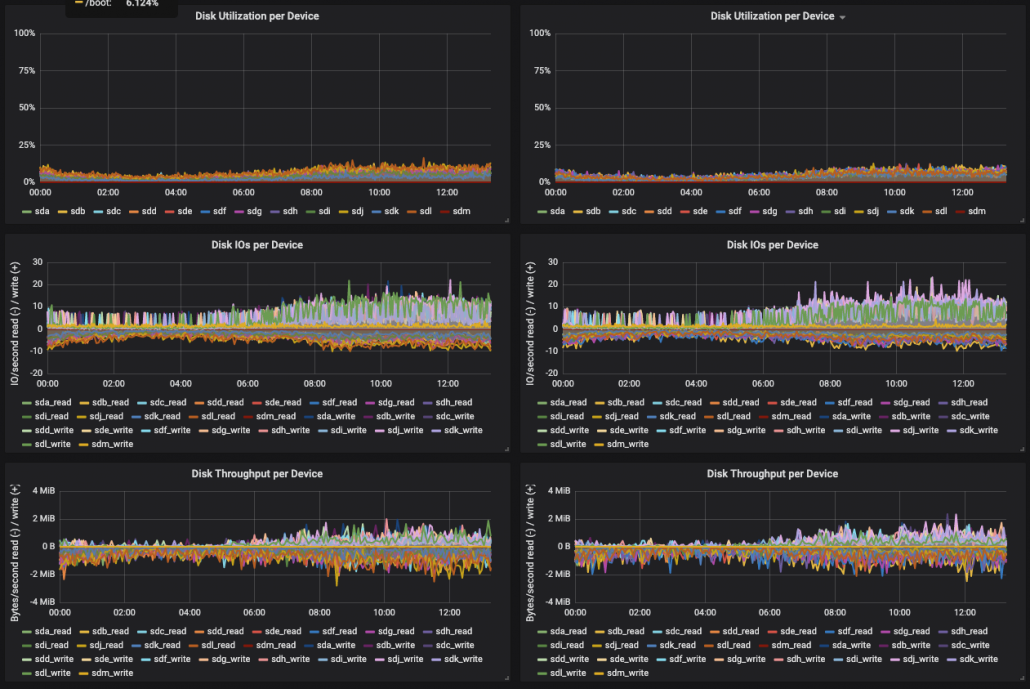

Disk metrics also differed between the two types:

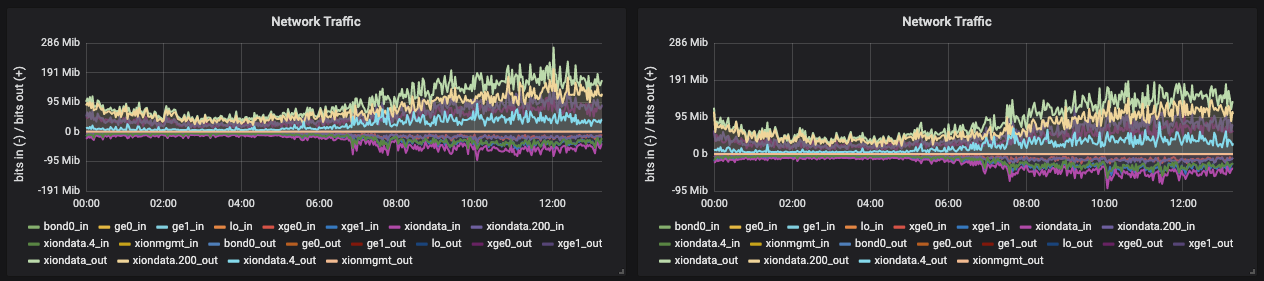

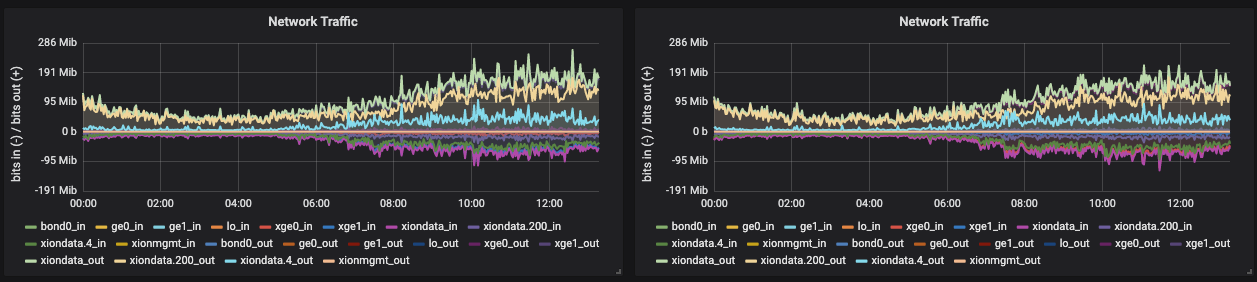

Disk utilization, throughput and IOPS were all higher on the Filestore nodes than on the Bluestore nodes. This indicates that the journaling filesystem puts more strain on the disks than Bluestore, which does not use a general-purpose filesystem. Network traffic was comparable for both node types.

3.3 Assessment

During normal operation, Bluestore nodes tended to run more smoothly than Filestore nodes, with less strain on memory, CPU and disk. Filestore not only put more strain on the hardware but also caused more errors, owing to the additional layer of complexity introduced by the filesystem.

4 | Failure Scenarios

4.1 Site Failure

In the event of a failure, service operations must master two things: reaction and communication. With this in mind, we have worked out failure scenarios that include both the action items for our service operations (reaction) and the messages for the chain behind the services, from our service desk to the end user (communication). In case of an incident, we inform our customers which failure scenario is currently in effect. The scenario describes our next steps as well as the expected impact, so that everyone along the service chain knows how to proceed.

Our failure scenarios are based on proven practical experience, and particularly critical scenarios are to be tested together with our customers (announced failures). Let’s look at a complete site failure caused by a power outage. The power was cut and restored quickly, so the hardware began rebooting immediately. After 5 minutes, the connection between the two sites was restored. After 6 minutes, the first cluster traffic across sites could be observed. After 8 minutes, the affected site had completely rebooted: all OSDs were up, all monitors were up and the cluster was healthy. In fact, it never reported an unhealthy status.

4.2 Observations

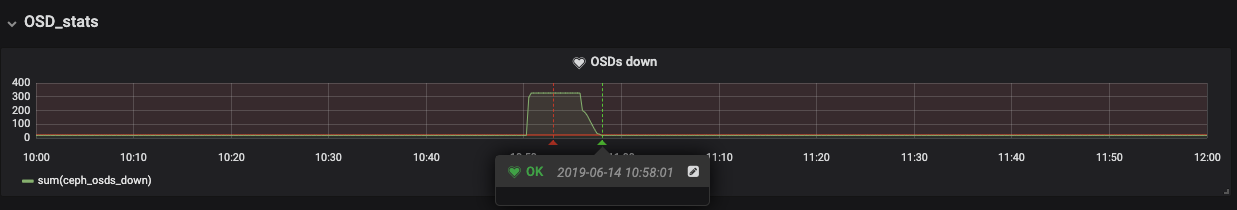

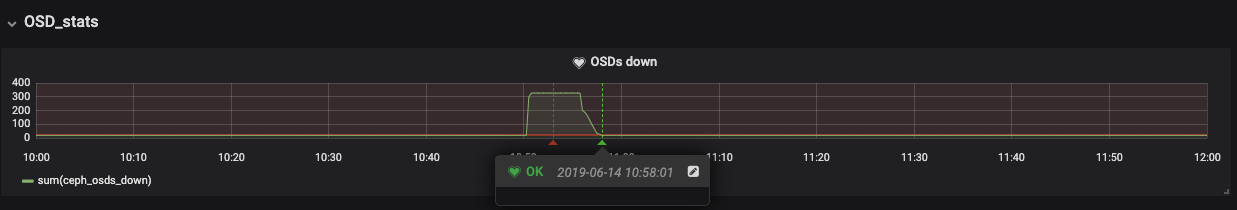

The scenario started at 10:50 a.m. and is clearly visible in the drop in IOPS and throughput and in the increased number of OSDs in status DOWN and of degraded PGs.

The cluster recovered completely within eight minutes, including the restart of the Bluestore OSDs.

The cluster stayed healthy and available for reading throughout the whole scenario, even while two ceph-mon daemons and about 180 OSDs were down.
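Ceph monitors must form a quorum, that is, a strict majority of all monitors, for the cluster to remain available. The total number of monitors in our cluster is not given here; the sketch below only illustrates the majority rule and shows that, with two monitors down, a quorum is possible only if there are at least five monitors in total.

```python
def quorum_size(num_mons: int) -> int:
    """Smallest strict majority of a monitor set."""
    return num_mons // 2 + 1


def has_quorum(num_mons: int, down: int) -> bool:
    """True if the monitors still running form a majority."""
    return num_mons - down >= quorum_size(num_mons)


for total in (3, 4, 5):
    print(total, quorum_size(total), has_quorum(total, down=2))
```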

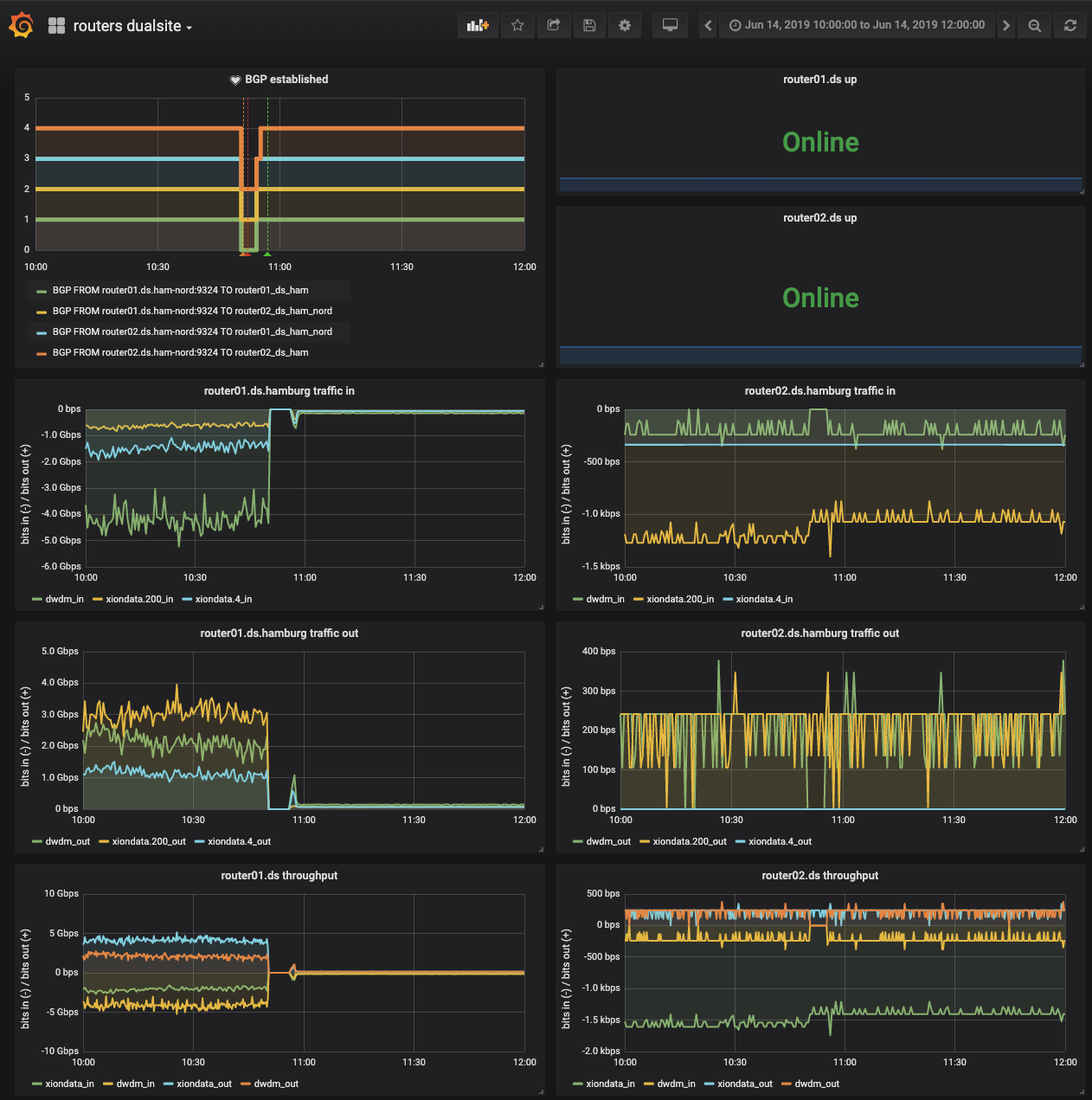

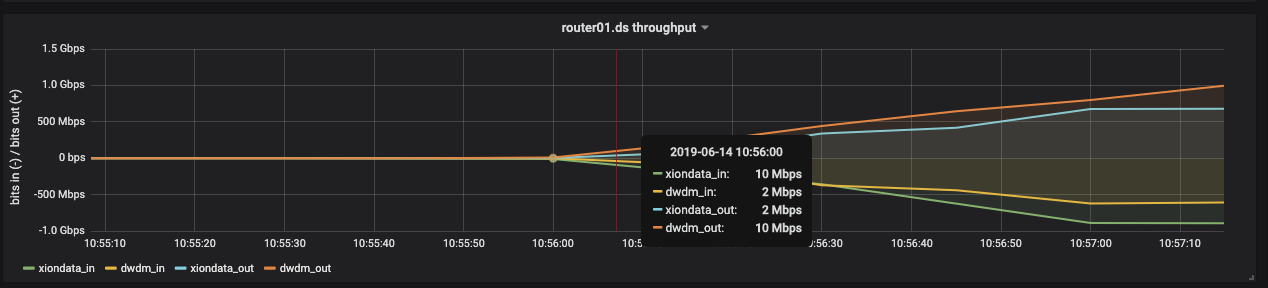

The connection between the two sites recovered completely within 5 minutes. The first cross-site cluster traffic appeared one minute later, probably because the nodes took longer to boot.

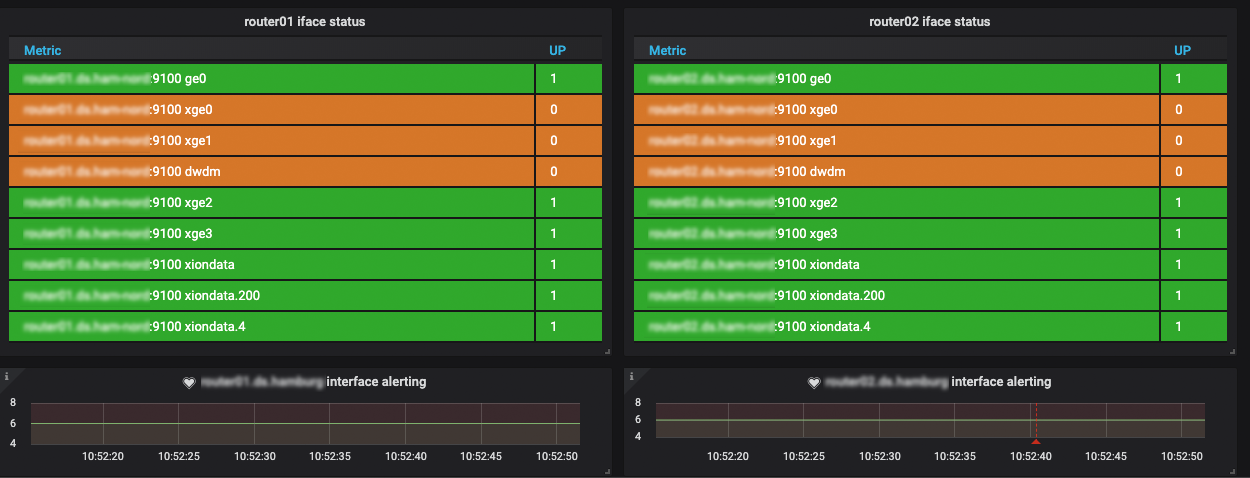

Link status monitoring worked as intended and showed that the links to the other routers were down.

4.3 Assessment

The cluster performed admirably and within expected parameters. The Bluestore OSDs recovered faster than Filestore OSDs usually do. For comparison, after a Filestore node is restarted, it takes 23 minutes for all of its OSDs to recover.

5 | Closing notes

After more than twelve months of heavy use, we are pleased to find that the trust we put in Bluestore has not been in vain:

- Bluestore nodes use less memory

- Bluestore nodes show greatly reduced load under stress

- Bluestore OSDs show lower disk utilization, decreasing the likelihood of hanging processes

- Bluestore OSDs have yet to show soft failures like hanging processes

- No Bluestore node so far has become unresponsive

Running Bluestore in production has been a very pleasant ride.